Explore how artificial intelligence is reshaping news delivery, content accuracy, and digital trends. This article delves into the evolving landscape of AI-powered journalism and the surprising implications for how people consume and trust news content every day.

AI and the Rapid Shift in Digital Newsrooms

Artificial intelligence (AI) is changing how newsrooms find, write, and distribute stories. Many newsrooms now rely on algorithms that monitor global developments in real time, flagging viral events and emerging topics before a human editor has spotted them. As a result, news stories can reach audiences faster than ever. AI curation helps both large media outlets and smaller digital publications keep pace with the rapid information cycle of the digital age. Trending topics, once managed by editors alone, are now updated continuously by predictive algorithms trained to recognize patterns in social engagement.

The reliance on data-driven news selection has tech companies racing to balance speed with accuracy. AI-powered recommendation engines not only personalize headlines but also learn which issues engage readers. Major platforms, for instance, use machine learning to adjust their front pages, tailoring article placement for each visitor. This promises a more individualized news experience, but it also raises concerns about filter bubbles, in which people see mainly news that confirms their existing beliefs. Audiences may then miss diverse perspectives or trending subjects that do not fit their usual reading habits.

The transformation of the digital newsroom brings a need for transparency. Media organizations have started to disclose when stories are partially or fully generated by AI or shaped by algorithms. This is a matter of ethical practice and also an emerging standard promoted by professional news associations. Readers increasingly expect to know how and why content appears on their screens. Newsrooms are actively discussing guidelines for responsible AI use, careful fact-checking, and maintaining the credibility of digital journalism as the technology becomes an integral part of reporting workflows.

Fact-Checking in the Age of Automated Journalism

As AI systems write news reports and summarize complex topics, concerns about misinformation and factual accuracy have grown. Automated news feeds may generate stories from unverified content, amplifying errors or biases embedded in their source data. News organizations are countering this with AI tools dedicated to verification. These algorithmic fact-checkers scan sources, cross-reference data, and flag inconsistencies. Because they can process large volumes of information much faster than humans, they can catch errors that might otherwise go unnoticed until a public correction is needed.
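The cross-referencing step can be illustrated with a minimal Python sketch. It is not any newsroom's actual tool: it assumes a claim has already been reduced to a single number and that figures from independent sources have already been collected. The function name `check_claim`, the 5% tolerance, and the example figures are all hypothetical. The claim is compared against the median of the source figures and labeled consistent, flagged, or unverified.

```python
from statistics import median


def check_claim(claimed_value, source_values, rel_tol=0.05):
    """Hypothetical cross-check of a numeric claim against independent sources.

    Returns "unverified" when no source is available, "consistent" when the
    claim is within rel_tol (relative) of the sources' median, else "flag".
    """
    if not source_values:
        return "unverified"  # nothing to cross-reference against
    reference = median(source_values)  # the median resists one outlier source
    if reference == 0:
        return "consistent" if claimed_value == 0 else "flag"
    deviation = abs(claimed_value - reference) / abs(reference)
    return "consistent" if deviation <= rel_tol else "flag"


# Made-up example claims: (claimed value, figures from independent sources)
claims = {
    "unemployment rate (%)": (4.1, [4.0, 4.1, 4.2]),
    "city population": (1_200_000, [950_000, 960_000]),
    "crowd size": (5_000, []),
}

for label, (value, sources) in claims.items():
    print(f"{label}: {check_claim(value, sources)}")
```

Running it labels the first claim consistent, the second flag, and the third unverified. In a hybrid workflow, a "flag" or "unverified" result would go to a human editor rather than being corrected automatically. Extracting checkable claims from free text in the first place is the harder problem, and this sketch does not attempt it.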

Editorial oversight still matters. While AI can handle repetitive tasks and analyze large datasets quickly, trained journalists are vital for applying context and judgment. Many leading publications use a hybrid model: AI produces a first draft or points out anomalies, and professionals refine the final product for accuracy and tone. This collaborative workflow promises to streamline newsrooms while preserving journalistic standards. Key figures in digital news stress the importance of ongoing training, both for staff and for the AI systems themselves, so that automation never displaces editorial values.

To further strengthen trust, nonprofit organizations, universities, and international watchdogs are developing open-source databases in which AI-generated stories are reviewed for accuracy. These initiatives make it easier for the public to check whether coverage of world events, scientific breakthroughs, or political issues has undergone independent assessment. Over time, such fact-checking collaborations could raise the overall quality of information online, provided that transparency and continual updates remain central to AI-powered news delivery.

Personalization’s Benefits and Challenges for Audiences

One of the promises of AI-driven news is tailored content. Machine learning models study user behavior (what readers click, share, or scroll past) to deliver a more engaging news experience. Personalized news feeds help readers discover topics relevant to their interests, location, or profession. This can raise satisfaction, since readers are less likely to feel overwhelmed by content that doesn’t match their needs. Publishers benefit too: loyalty and time spent on site increase as visitors find more of what they care about most.

Personalization isn’t without trade-offs. Recommender algorithms sometimes promote articles that reinforce readers’ existing opinions, deepening the so-called filter bubble effect. Some research suggests that repeated exposure to similar viewpoints may reduce awareness of alternative perspectives or important world developments. Experts in digital literacy encourage using diverse sources and reading critically, even as AI continues to shape what appears in individual news timelines. Some organizations now offer tools that let readers tune algorithmic recommendations, putting more control back into the hands of the audience.
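The tension between relevance and variety can be shown with a small ranking sketch in Python. It does not describe any publisher's real recommender: it assumes a reader's interests are simply the share of past clicks per topic, and the article list, `interest_profile`, `rank`, and the `diversity` setting are hypothetical. With `diversity` at zero the feed is ranked purely by predicted interest. Raising it applies a simple penalty each time a topic repeats, a crude greedy re-ranking rather than any published algorithm, loosely similar in spirit to a user-adjustable recommendation control.

```python
from collections import Counter

# Hypothetical articles as (headline, topic); not taken from any real platform.
ARTICLES = [
    ("Budget vote delayed", "politics"),
    ("Senate debates tax bill", "politics"),
    ("Election polls tighten", "politics"),
    ("New exoplanet found", "science"),
    ("Local team wins final", "sports"),
    ("Heat wave hits region", "weather"),
]


def interest_profile(click_history):
    """Turn a list of clicked topics into each topic's share of all clicks."""
    counts = Counter(click_history)
    total = sum(counts.values())
    return {topic: n / total for topic, n in counts.items()}


def rank(articles, profile, k, diversity=0.0):
    """Greedily pick k articles: predicted interest minus a repeat-topic penalty.

    diversity=0.0 ranks purely by interest (prone to filter bubbles);
    larger values push topics not yet shown further up the feed.
    """
    chosen = []
    shown = Counter()  # how many times each topic is already in the feed
    remaining = list(articles)
    while remaining and len(chosen) < k:
        best = max(
            remaining,
            key=lambda item: profile.get(item[1], 0.0) - diversity * shown[item[1]],
        )
        chosen.append(best)
        shown[best[1]] += 1
        remaining.remove(best)
    return chosen


profile = interest_profile(["politics"] * 8 + ["science"] * 2)
for d in (0.0, 1.0):
    feed = rank(ARTICLES, profile, k=3, diversity=d)
    print(d, [headline for headline, _ in feed])
```

With `diversity=0.0`, all three slots go to politics stories; with `diversity=1.0`, the top three cover politics, science, and sports. The reader-facing "knob" here is a single number, which is one simple way such a control could be exposed.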

Transparency about how personalization works is emerging as a best practice. News providers are increasingly offering details about how their AI systems select, rank, and suggest stories. Providing these insights builds audience trust and helps readers make informed choices about their news consumption. As these improvements roll out, the relationship between readers and digital news outlets may become more interactive, collaborative, and grounded in mutual understanding.

Data Privacy and Security in AI-Powered News Platforms

AI-driven news relies on data, and lots of it. Every click, page view, comment, or share feeds the web of user data that powers personalized recommendations and automated newswriting. Safeguarding this information is one of the biggest challenges facing digital media. Data breaches or misuse of reader profiles can undermine public trust, particularly if sensitive habits or preferences are exposed. This risk is prompting news organizations and tech companies to adopt stronger security protocols and transparent data policies.

Safeguards go beyond technical solutions. Regulations such as the European Union’s General Data Protection Regulation (GDPR), along with similar privacy frameworks around the world, are transforming how platforms manage personal information. These rules generally require a lawful basis, such as consent, for collecting personal data, privacy notices written in clear language, and ways for users to access or delete their data. Major news providers have responded with privacy dashboards that let readers see, adjust, or opt out of algorithmic news customization.

Privacy advocates recommend that news consumers regularly review what personal information they share online. Opting to limit tracking or anonymize browsing can provide an extra layer of security. Responsible publishers are leading by example, regularly updating their data handling practices and informing audiences about changes. This vigilance benefits readers and helps ensure the continued growth of trusted AI-powered news services in the digital age.

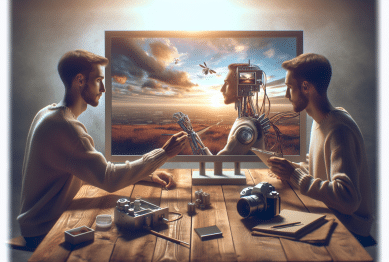

Emerging Trends: AI Ethics and the Future of Journalism

As AI’s influence on news grows, conversations about ethics and responsibility are intensifying. Media professionals and technologists are debating issues such as bias in algorithms, the transparency of editorial decisions, and the right of audiences to understand how AI systems shape what they see. Prominent journalism schools are now offering courses focused on responsible AI, emphasizing fairness, accountability, and human oversight. These programs are preparing a new generation of journalists to understand and navigate the trade-offs of automation in newsrooms.

Public debate has also spurred innovation. News organizations, academics, and tech developers are collaborating on frameworks that promote ethical AI. These efforts include audit tools that regularly assess whether news algorithms perpetuate bias, violate privacy, or distort facts. Codes of conduct, voluntary but increasingly adopted, encourage responsible deployment of automated systems, especially when reporting on sensitive subjects or vulnerable groups. These ethical frameworks aim to safeguard the essential values of journalism: accuracy, independence, and public service.

Looking ahead, the evolution of AI in news is expected to bring more interactive, audience-driven models of reporting. Some outlets are exploring conversational newsbots, virtual interviews, and even crowdsourced story selection using AI moderation. These experiments point to a future where journalism is not only more efficient and rich in insight, but also more open and responsive to the needs of a diverse, global audience. As the conversation continues, both readers and reporters are playing crucial roles in shaping how technology serves the public interest.

How to Stay Informed and Engaged in a Changing Media World

The tools and platforms people use to follow the news are evolving rapidly. Staying informed today means understanding both the content being delivered and the invisible processes shaping its delivery. Digital literacy, once focused on spotting hoaxes or evaluating sources, now includes knowledge about how algorithms and AI influence news feeds. Major nonprofits and educational organizations offer resources for readers to navigate these changes and make well-rounded choices about daily news habits.

Proactive readers benefit from exploring news from multiple outlets, seeking a balance between mainstream, independent, and international perspectives. Engaging with media literacy programs can deepen understanding of news production, reveal common misconceptions, and encourage critical thinking about story accuracy and source credibility. Some public initiatives train people to recognize AI-generated content and understand transparency labels, equipping audiences for the realities of modern digital media.

Active engagement is key. Commenting on articles, asking questions, and joining discussions about the technology’s impact all help create a more participatory news culture. As AI continues to advance and change how news is produced, distributed, and consumed, the relationship between journalists, tech creators, and the public grows more important. Informed, curious readers will have a central role in ensuring that evolving news trends continue to serve society’s needs and values.
