AI headlines are reshaping coverage across global media and, with it, your digital experiences and work environments. This article looks at how artificial intelligence developments, regulations, and trends are changing daily routines and what these changes could mean in the months ahead.

AI Making Headlines: What Drives the News Cycle

The speed at which artificial intelligence appears in the news cycle is remarkable. From breakthroughs in generative models to discussions about ethics in technology, AI has become a permanent fixture not just in technology columns but across politics, finance, and lifestyle reporting. Mainstream media increasingly covers stories about how automated systems fuel social media algorithms, power online recommendation engines, and affect how we access information every day. These stories not only inform but subtly shape public perceptions of what AI is and what it can do, setting the stage for ongoing debates around privacy and personal agency.

What drives this surge in coverage? Recent advances in natural language processing, computer vision, and machine learning have rapidly moved from academic labs to consumer platforms. Major announcements from technology leaders about AI products or updated privacy policies are frequently picked up by journalists eager to highlight how near-future technology may change everyday life. The success of large-scale AI models in creative arts, search, healthcare diagnostics, and cybersecurity keeps the conversation alive and evolving. Because AI’s scope keeps expanding, breakthroughs such as AI-assisted disease diagnosis or realistic image generation capture attention and drive both curiosity and cautious optimism among readers.

Social concerns—such as the use of algorithms to moderate content or spot misinformation—make AI news particularly noteworthy. Topics like algorithmic fairness, bias mitigation, and responsible data practices enter the mainstream dialogue, encouraging the public to ask critical questions. This consistent news focus means that understanding AI trends is no longer optional but central to being an informed digital citizen today. Explore more about why AI remains at the forefront of trends discussed by news agencies and academic institutions alike (https://www.pewresearch.org/internet/2023/10/09/ai-and-human-enhancement-optimism-supervision-and-concerns/).

Understanding Artificial Intelligence: Concepts Behind the Headlines

To understand why AI news dominates trends, it helps to clarify what artificial intelligence means in various contexts. At its core, AI refers to technologies designed to perform tasks associated with human thinking, learning, and problem-solving. One major branch, machine learning, learns from patterns in data to make predictions, such as when your streaming platform suggests a new show. Neural networks, a family of machine learning models loosely inspired by the structure of the brain, analyze images or language with impressive precision. The range of applications includes chatbots, translation tools, fraud detection systems, and digital art generators.

But behind the scenes, AI is more than just a collection of smart gadgets. What grabs news headlines is often the scale: large language models can process and generate human-like text, while vision systems can screen large volumes of medical images far faster than a human reviewer. As companies race to create more capable systems, AI research extends into ethics, debating who should control such algorithms and which data sources are fair to use. This is why artificial intelligence news often emphasizes both the positive potential and the limitations of current technology, encouraging conversations about what comes next for both businesses and consumers.

Global think tanks and academic bodies frequently publish reports on emerging AI challenges, fueling long-form journalism and thoughtful analysis. Articles frequently highlight the difference between “narrow” AI that excels at specific tasks (like identifying fraud in transactions) and “general” AI that may someday rival full human reasoning. With every new breakthrough or cautionary tale, the news cycle draws attention to risks and rewards, asking whether the benefits of AI outweigh concerns for privacy, bias, and accountability (https://www.brookings.edu/research/artificial-intelligence-and-the-future-of-work/).

Regulation and Policy Trends: How Governments Respond

News coverage increasingly examines regulation and policymaking in the artificial intelligence space. Governments and international agencies deliberating how best to guide, monitor, or limit the use of automated systems often make headlines. Prompted by stories of AI mishaps or biased outcomes, legislative bodies propose bills on data transparency, explainability, and consumer rights. Some countries have formed dedicated task forces to study AI’s impact, with the aim of keeping public well-being and economic stability in view as systems are developed.

This surge in regulatory activity triggers robust discussion in the news. For example, the European Union’s AI Act, proposed in 2021 and adopted in 2024, classifies AI systems by risk level and sets compliance standards for each tier. In the United States, federal agencies are coordinating cross-sector responses to address AI’s influence on economic policy, labor, healthcare, and security. These efforts help set international norms and encourage global collaboration. With each regulatory milestone, more attention is paid to how companies adapt and how these legal efforts might affect products consumers use every day.

Civic groups and advocates also play a role by informing the public through op-eds and analysis. The main themes? Protecting user privacy, requiring impact assessments, and ensuring that automated systems remain transparent and auditable. As legal frameworks evolve, news outlets track how affected industries respond: from recruiting AI compliance officers to creating internal review boards. Tracking regulation trends in AI offers valuable insights on what leaders and innovators prioritize each year (https://ec.europa.eu/commission/presscorner/detail/en/ip_21_1682).

Impacts on Work: Adapting to AI-Powered Changes

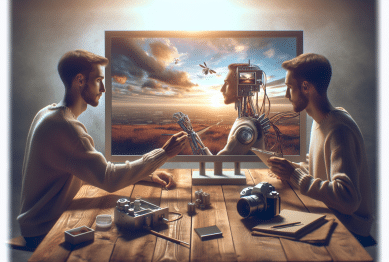

Workplace trends frequently make AI news headlines. Automation has transformed repetitive processes in manufacturing, logistics, customer service, and even creative fields. Where humans once handled routine scheduling and data analysis, AI can now take over, freeing up people for more strategic or creative roles. This shift generates both excitement and anxiety, as debates about job displacement and job creation intensify. Many news stories now focus on upskilling: helping workers understand these systems and work alongside them.

AI is also helping redefine what productivity means. In offices around the world, language models draft emails and summarize meeting notes, while intelligent assistants organize complex schedules. In scientific settings, algorithms analyze vast data sets to identify trends that would be impossible for any one individual to spot. By reporting on these innovations, the media highlights how quickly expectations are shifting for workers and managers alike. This transformation raises questions about digital literacy, training, and what roles will remain uniquely human for the long term.

Academic research and economic think tanks frequently offer insight into the evolving labor landscape. Reports often suggest that collaboration between technology and talent is the most sustainable path forward. News articles note rising interest in lifelong learning platforms, coding bootcamps, and AI literacy as organizations look to support a smooth transition for their workforce. Understanding these impacts helps individuals and businesses make sense of rapid workplace changes and anticipate future directions (https://hbr.org/2023/03/what-ai-can-and-cant-do-in-the-workplace).

AI Ethics, Privacy, and Bias: Why These Stories Matter

The ethical dimensions of AI development and deployment attract ongoing news coverage. Reporters tackle questions such as: Who trains the algorithms? Whose data is used? Are the outcomes fair across different user groups? These stories often spark debate about digital privacy and algorithmic transparency. Major publishers and research organizations release regular analyses on AI bias—whether intentional or unintended—and the social consequences that follow.

Recent investigations have shown that bias can enter systems at many points: during data collection, model training, or even user interface design. Coverage on these issues encourages new standards of accountability for tech companies, highlighting the need for diverse research teams and transparent documentation. News coverage around this topic reinforces that AI is not neutral; it reflects choices made by developers, institutions, and society at large.

The push for ethical design and deployment is gaining ground worldwide. Regulatory agencies, nonprofit advocacy groups, and academic experts weigh in through white papers and media interviews, demanding that fairness and privacy protections be built into every stage of development. As a result, companies increasingly invite outside audits or create open channels for reporting algorithmic issues. Following cuts to data privacy budgets or revelations of bias, the news media often highlights corrective actions taken by industry leaders (https://www.technologyreview.com/2021/05/21/1024898/the-problem-of-bias-in-ai/).

AI Trends for Consumers: Everyday Technology in Focus

For consumers, much of AI news feels personal: Smart home assistants streamline daily chores, personalized news feeds curate digital experiences, and voice-activated shopping is on the rise. But beyond convenience, news outlets question what happens to all the data these devices collect and the invisible ways recommendations shape decision making. Features like facial recognition and location tracking boost functionality for some but raise privacy concerns for others. These consumer-facing developments are tracked closely by journalists and watchdog groups.

The latest consumer AI trends include wearable health trackers, language translation earbuds, and virtual customer support bots available on shopping platforms. As adoption grows, more resources become available to help people understand the pros and cons before choosing new hardware or apps. Advice columns, informed by both tech analysts and consumer protection agencies, are increasingly found in major publications, helping individuals sort through options and make informed decisions. By following AI trends, consumers can better appreciate not just how these tools work but how they influence daily life and choices.

Another fast-growing area is AI-powered education. Schools and online platforms use customizable learning systems that adapt to each student’s needs. News coverage examines both the benefits and pitfalls, from improved outcomes to questions about equitable access. Readers looking for practical advice or considering new tech purchases benefit from following reputable reviews and regulatory updates. Understanding these trends lets readers anticipate what’s next for technology in their everyday environments (https://consumer.ftc.gov/consumer-alerts/2023/06/artificial-intelligence-and-your-consumer-rights).

References

1. Funk, C., Kennedy, B., Hefferon, M., & Johnson, C. (2023). AI and Human Enhancement: Optimism, Supervision and Concerns. Pew Research Center. Retrieved from https://www.pewresearch.org/internet/2023/10/09/ai-and-human-enhancement-optimism-supervision-and-concerns/

2. West, D. M. (2018). Artificial Intelligence and the Future of Work. Brookings Institution. Retrieved from https://www.brookings.edu/research/artificial-intelligence-and-the-future-of-work/

3. European Commission. (2021). Europe Fit for the Digital Age: Commission proposes new rules and actions for excellence and trust in Artificial Intelligence. Retrieved from https://ec.europa.eu/commission/presscorner/detail/en/ip_21_1682

4. Davenport, T., & Mittal, N. (2023). What AI Can and Can’t Do in the Workplace. Harvard Business Review. Retrieved from https://hbr.org/2023/03/what-ai-can-and-cant-do-in-the-workplace

5. Hao, K. (2021). The problem of bias in AI. MIT Technology Review. Retrieved from https://www.technologyreview.com/2021/05/21/1024898/the-problem-of-bias-in-ai/

6. FTC. (2023). Artificial Intelligence and Your Consumer Rights. U.S. Federal Trade Commission. Retrieved from https://consumer.ftc.gov/consumer-alerts/2023/06/artificial-intelligence-and-your-consumer-rights
