Artificial intelligence is booming, but as governments race to set new rules, many are wondering how these rapid changes will affect technology, businesses, and everyday life. Dive into this guide to understand what’s evolving in global AI regulation and why it matters for you.

Rising AI Adoption and the Drive for Regulation

Artificial intelligence is now woven into countless aspects of society, reshaping everything from finance to healthcare. Organizations leverage machine learning to detect fraud, optimize logistics, and even guide medical diagnoses. As systems like natural language models and autonomous vehicles grow in sophistication, regulatory bodies are paying close attention. Globally, there is a renewed sense of urgency to ensure that AI technologies develop responsibly and ethically. The proliferation of AI means that regulatory updates appear frequently in technology headlines.

Concern is mounting around data privacy, algorithmic transparency, and potential biases in artificial intelligence systems. News outlets frequently feature debate about how unchecked AI might influence job markets, user privacy, and democratic processes. Recent high-profile incidents—such as deepfakes or biased automated decision-making—have driven more stakeholders, from consumer groups to legislators, to advocate for robust oversight. Many believe that a lack of clear guidelines could lead to misuse or unintended harm, making regulatory clarity a public demand.

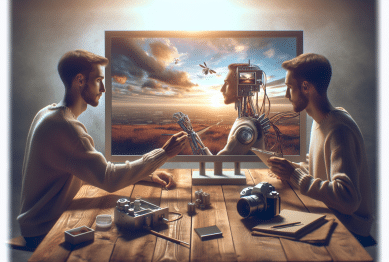

Tech companies, meanwhile, watch closely as legislative landscapes shift. Businesses integrating AI in their products face evolving reporting requirements and increasing scrutiny over data use. In response to both government proposals and public sentiment, many are proactively revising their risk assessments. The ongoing exchange between advocates, regulators, and technology leaders is shaping a framework designed to steer AI development safely and transparently.

What Drives Governments to Act on AI Policy

Regulatory trends in artificial intelligence often reflect a blend of public concern, technological progress, and economic competition. Countries recognize that AI can improve productivity and bolster innovation, but risks tied to security and fairness frequently dominate regulatory conversations. Governments study real-world problems, such as biased credit scoring or facial recognition controversies, to inform new rules. For instance, the European Union’s AI Act, formally adopted in 2024, sorts AI systems into risk tiers and attaches transparency obligations to them, with the aim of preserving individual rights.

National security and economic stability are major drivers for lawmakers. Policymakers assess scenarios in which algorithmic errors could undermine infrastructure, disrupt economies, or threaten public safety. Some governments even coordinate internationally to build unified frameworks or share lessons. This coordinated approach signals the growing importance attributed to artificial intelligence regulation at a global scale. Economic competition further incentivizes rapid response, as countries race to establish themselves as leaders in responsible AI adoption.

Transparency often emerges as a central goal. Regulators explore how to require that AI systems provide explainable outputs or enable user recourse in the case of mistakes. Oversight commissions also recommend mechanisms for trusted auditing and impact assessments. While policy language varies worldwide, the push to create dependable guardrails for innovation is unmistakable. Attention is also paid to balancing innovation with restricting harmful practices, steering AI toward safe and ethical deployment.

Key Proposals: What Laws and Frameworks Suggest

New rules, such as the aforementioned EU AI Act, identify high-risk areas for regulation. These frameworks usually target technologies like facial recognition, biometric surveillance, and tools that affect consumer finance. Most proposals require companies to document how their systems work, maintain robust data governance, and enable human oversight when critical decisions are made. Not all countries follow the same approach, but many seek similar outcomes: fairness, accountability, and reduced systemic risk.
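To make the idea of risk tiers and human oversight concrete, here is a minimal Python sketch. It assumes a simplified three-tier scheme and a made-up mapping of use cases to tiers; the names `RiskTier`, `classify`, and `route` are hypothetical and do not come from any statute or library. Real frameworks define their categories in far more detail.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3


# Hypothetical mapping for illustration only. The high-risk entries mirror
# the examples named in the text; the limited-risk entries are assumptions.
HIGH_RISK_USES = {"facial_recognition", "biometric_surveillance", "consumer_credit"}
LIMITED_RISK_USES = {"chatbot", "product_recommendation"}


def classify(use_case: str) -> RiskTier:
    """Return the assumed risk tier for a named use case."""
    if use_case in HIGH_RISK_USES:
        return RiskTier.HIGH
    if use_case in LIMITED_RISK_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


@dataclass
class Decision:
    use_case: str
    model_output: str
    needs_human_review: bool


def route(use_case: str, model_output: str) -> Decision:
    """Flag high-risk decisions so a person reviews them before they take effect."""
    tier = classify(use_case)
    return Decision(use_case, model_output, needs_human_review=(tier is RiskTier.HIGH))


credit_decision = route("consumer_credit", "decline")
chat_reply = route("chatbot", "Hello!")
```

In this sketch, `credit_decision.needs_human_review` is true while `chat_reply.needs_human_review` is false, which is the kind of split a human-oversight requirement implies.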

Transparency and data privacy are recurring themes throughout AI legislation. Many frameworks ask for documentation on how personal information is handled, as well as methods to identify and address unintended bias. For businesses, compliance often means regular audits, proactive risk mitigation measures, and increased documentation tied to AI model development. Meanwhile, standards bodies recommend tools for independent third-party assessment to confirm compliance.

Another area drawing increased attention is cross-border data transfer. Regulators want to ensure that as AI-powered platforms operate globally, sensitive information is not misused. As part of many recent proposals, there are mechanisms for redress if systems cause harm. This ensures there’s a pathway to investigate and address errors or abuses. Such measures are widely debated but generally draw strong support among consumer advocates and privacy organizations.

Impacts on Business, Innovation, and Everyday Life

The evolving regulatory landscape directly shapes how businesses use artificial intelligence. Many organizations are investing more in compliance, not just to avoid penalties but to build trust with users and partners. Some companies even create dedicated AI ethics teams to monitor the effects of machine learning tools. These changes often stretch across industries, from digital banking to healthcare, affecting product development and innovation speed.

The impact on consumers is subtle but significant. Everyday interactions—from online shopping recommendations to virtual medical consultations—are increasingly governed by rules meant to protect individuals and improve service quality. For instance, clear disclosures on automated decision-making allow users to make informed choices about the technology they interact with. Transparency and the ability to challenge AI-driven decisions are often core requirements in new regulations, offering greater peace of mind in digital experiences.

As rules evolve, innovation adapts. Some researchers argue that thoughtful regulation encourages smarter, safer AI by setting clear incentives for responsible design. Others warn that overregulation could slow breakthroughs. Both camps generally agree that widespread adoption depends on a delicate balance: fostering creativity while addressing legitimate risks. The news cycle reflects this tension, reporting on both regulatory successes and industry pushback.

Ongoing Challenges: Fairness, Bias, and Accountability

One of the toughest obstacles for regulators is addressing bias in artificial intelligence. Systems trained on large datasets can inadvertently amplify societal inequalities, leading to flawed decisions in hiring, banking, or law enforcement. As more stories emerge highlighting these risks, calls for strong, enforceable standards grow louder. Fairness audits and accessibility checks are increasingly seen as must-haves for responsible AI deployment.
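One simple check a fairness audit might include is a comparison of approval rates across groups. The Python sketch below is illustrative only: the sample data is invented, the function names are hypothetical, and the 0.8 threshold is borrowed from the "four-fifths" rule of thumb used in US employment guidance, not from any AI regulation discussed here.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group approval rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no disparity can be measured
    return min(rates.values()) / highest


# Invented sample: group A is approved 8 times out of 10, group B 5 out of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed threshold; a flag is a prompt to investigate
```

A low ratio does not prove discrimination on its own, and a passing ratio does not rule it out. It is a starting signal that tells an auditor where to look more closely.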

Accountability also poses a challenge. Determining who should be responsible when things go wrong is not always straightforward, especially for complex, automated systems. Some proposals recommend shared liability or mandatory insurance, particularly in high-risk fields. Discussions about what constitutes acceptable use, and how to trace responsibility, frequently appear in industry analyses and regulatory commentary. News coverage spotlights consumers seeking recourse after automated errors, driving home the need for robust pathways to address grievances.

Societies worldwide continue to debate what fairness looks like in algorithmic systems. Advocacy groups push for strong transparency and participation from diverse stakeholders—including historically marginalized communities. This collaborative approach is intended to strengthen trust while making regulations more effective. As high-profile incidents continue to make headlines, it becomes even clearer why a thoughtful, inclusive process is essential to developing accountable AI systems.

The Future of AI Regulation: What to Watch

The future of artificial intelligence regulation is dynamic and uncertain, shaped by advances in both technology and law. Ongoing research explores new methods for robust model validation, real-time oversight, and public involvement in policy-making. Trends suggest future frameworks will be more adaptive, updating rules as new risks or opportunities are discovered. Many experts predict that cross-border collaboration will become more common, gradually replacing today’s patchwork of national rules with more harmonized guidelines.

Evolving policy will likely address not just technical issues, but broader impacts on rights, identity, and the workplace. For example, as generative models become more powerful, there are proposals to ensure transparency about AI-generated content. Calls persist for regular review of existing regulations to reflect fast-moving advances. This means businesses and the public will need to stay informed and flexible, continuously adjusting to the latest guidance.

Ultimately, artificial intelligence regulation is a collective experiment. Governments, businesses, technologists, and civil society will each shape how AI is used and managed. For individuals, monitoring these shifts is key to navigating a digital future—whether using AI-powered gadgets or considering how automated decisions affect daily life. The story is still unfolding, and every stakeholder has a role to play in its next chapter.
