Artificial intelligence continues to reshape how news is produced, distributed, and consumed. This article explores how evolving AI applications affect journalism, media reliability, social trends, and ethical debates, and how you can spot misinformation and navigate the digital future with confidence.

What’s Behind the Surge in AI Media Coverage?

Artificial intelligence has become a consistent headline-maker. Whether the topic is a breakthrough in generative models or a concern about data privacy, AI dominates both tech-sector and mainstream media conversations. Journalists cover new developments quickly, partly because audiences show a growing appetite for analysis of automation, machine learning, and their impacts on society. The volume of AI-related news has increased dramatically, and this surge raises questions about accuracy, bias, and how information is curated.

The complexity of AI systems also means there’s a spectrum of interpretations, some optimistic and others cautious. Media outlets often highlight revolutionary use cases: AI-powered medical diagnostics, smart city innovations, or even art creation tools. Yet nuanced debates around ethics, transparency, and algorithmic bias are just as prominent. Staying informed requires more than skimming headlines. Developing the habit of critically assessing the sources of such news is an essential media literacy skill.

The economic motivations behind widespread AI coverage can’t be ignored. Digital publishers are incentivized to cover trending topics that attract clicks and advertising. As a result, some articles may exaggerate the benefits or pitfalls of AI-driven advances to capture audience attention. This makes it even more important for readers to discern balanced reporting from hype. Exploring diverse sources and analyzing both factual claims and opinions can help maintain a well-rounded perspective on AI’s role in our world.

How Artificial Intelligence Is Transforming Newsrooms

Inside modern newsrooms, artificial intelligence acts as both assistant and disruptor. AI tools now help journalists research information, scan archives, and even draft basic reports. Automated fact-checking, content recommendation algorithms, and real-time trend analyses help news organizations deliver rapid updates to their audiences. With data-driven insights, news teams can decide which stories matter most to their readers and sharpen their editorial focus for the digital era.

There’s a growing interest in how AI-powered content curation affects the news you see on social networks and search platforms. Recommendation systems learn from user behavior and preferences, often creating personalized content feeds. While this can help surface relevant news, it can also contribute to filter bubbles—where readers only encounter viewpoints similar to their own. Media watchdogs and researchers are paying attention to these shifts, hoping to safeguard against narrowing worldviews.
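To make the filter-bubble effect concrete, here is a minimal sketch in Python. It is not how any real platform works: the article catalogue, the `recommend` function, and the `topic_diversity` measure are all hypothetical, and the ranking rule (favor topics the reader clicked before) is a deliberately simple stand-in for a real recommendation system.

```python
from collections import Counter

# Hypothetical catalogue of (headline, topic) pairs; all data is illustrative.
ARTICLES = [
    ("Council approves new budget", "politics"),
    ("Local team wins final", "sports"),
    ("New exoplanet discovered", "science"),
    ("Election polling tightens", "politics"),
    ("Storm warning issued", "weather"),
    ("Senate debates privacy bill", "politics"),
]


def recommend(click_history, articles, k=3):
    """Return the top-k articles, ranked by how often the reader
    previously clicked on each article's topic."""
    topic_counts = Counter(click_history)
    # sorted() is stable, so articles with equal scores keep catalogue order.
    ranked = sorted(articles, key=lambda a: topic_counts[a[1]], reverse=True)
    return ranked[:k]


def topic_diversity(feed):
    """Count the distinct topics that appear in a feed."""
    return len({topic for _, topic in feed})


# A new reader with no history sees a mixed feed.
new_reader_feed = recommend([], ARTICLES)

# A reader who mostly clicked politics sees only politics.
history = ["politics", "politics", "sports"]
feed = recommend(history, ARTICLES)

print(topic_diversity(new_reader_feed), topic_diversity(feed))
```

In this toy setup the feed narrows from three topics to one after only a few clicks. Real systems weigh many more signals, but the underlying feedback loop is similar in kind.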

Some organizations have also experimented with AI-generated news stories, covering topics like local sports, finance, and weather. This frees up human reporters for investigative projects and analysis. However, it also leads to discussions about accountability: Who is responsible if an AI-generated story contains errors? Transparent AI practices and rigorous editorial oversight remain crucial as technology evolves. Ongoing training and ethical guidelines, supported by recognized institutions, shape responsible adoption.

Tackling Misinformation in the Age of AI-Driven Media

Misinformation isn’t a new challenge, but AI can complicate the problem by enabling rapid content creation and distribution. Deepfakes, manipulated audio, and text generated by large language models make it easier than ever for misleading stories to look convincing. Media outlets and fact-checkers leverage their own AI-driven tools to spot deceptions, comparing content across trusted databases to find inconsistencies and flag potential fakes. Digital literacy campaigns, often supported by educational nonprofits, encourage skepticism and verification before sharing news.
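The idea of comparing content against trusted records can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `TRUSTED_STATEMENTS` list, the `best_match` and `needs_review` helpers, and the 0.8 threshold are all hypothetical, and plain text similarity from the standard library's `difflib` stands in for the far more sophisticated matching that real fact-checking tools use.

```python
from difflib import SequenceMatcher

# Hypothetical "trusted database"; a real one would be far larger.
TRUSTED_STATEMENTS = [
    "The city council approved the 2024 budget on Monday.",
    "The river reached a record height of 5 metres in March.",
]


def best_match(claim, trusted):
    """Return (similarity, statement) for the trusted statement
    whose wording is closest to the claim."""
    scored = [
        (SequenceMatcher(None, claim.lower(), s.lower()).ratio(), s)
        for s in trusted
    ]
    return max(scored)


def needs_review(claim, trusted, threshold=0.8):
    """Flag a claim for human review when no trusted statement
    resembles it closely. The threshold is an arbitrary assumption."""
    score, _ = best_match(claim, trusted)
    return score < threshold


print(needs_review("The city council approved the 2024 budget on Monday.",
                   TRUSTED_STATEMENTS))
print(needs_review("Aliens landed in the town square yesterday.",
                   TRUSTED_STATEMENTS))
```

Note the limits of this approach: wording similarity says nothing about truth. A claim that changes a single number in a trusted statement would still score as a close match, which is one reason such tools route content to human fact-checkers instead of issuing verdicts on their own.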

Social networks and video streaming platforms have introduced automated systems to detect and demote misleading stories. However, these systems are not foolproof and sometimes mistakenly suppress legitimate reporting. Public awareness efforts, run in collaboration with researchers and global organizations, emphasize checking multiple sources and relying on established outlets for significant news. This combined approach lets the public, journalists, and technologists play complementary roles in quality control.

Ethical questions around digital manipulation will likely grow as AI tools become more sophisticated and accessible. Advocates call for transparency whenever AI tools are involved in creating or editing content. Some newsrooms now disclose where artificial intelligence has played a role, allowing readers to make informed judgments. Policies are evolving quickly, as legal and societal frameworks try to keep pace with the technical realities of misinformation management.

AI’s Influence on Public Opinion and Social Trends

The relationship between artificial intelligence and public opinion is intricate. Algorithms increasingly shape what information people see, amplifying some narratives and muting others. Social media companies use machine learning to boost engagement, which sometimes means sensational stories reach more people than evidence-based news. Surveys show that people are both fascinated and wary: curious about AI’s convenience, cautious about its potential to deepen divisions within society. Understanding these dynamics helps readers recognize when technology might sway or reinforce pre-existing views.

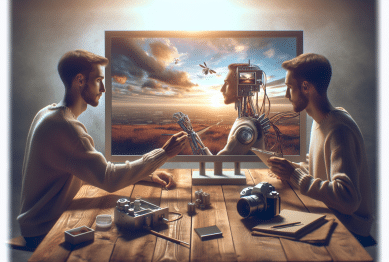

Online influencers and digital trendsetters embrace AI for content creation, using tools that generate images, videos, or even entire scripts. This creativity fuels new forms of entertainment and education but also blurs the line between authentic and synthetic experiences. The popularity of AI-generated art and viral videos demonstrates society’s openness to engaging with digital novelties. Institutions like universities and public broadcasters are producing resources to explain these shifts to everyday audiences, bridging knowledge gaps.

Social trends, from online challenges to activism, often spread through algorithm-driven platforms. Trend forecasting models pick up on viral themes, which companies use for targeted campaigns. As technology’s impact on society intensifies, the call grows for more transparency, oversight, and inclusivity in algorithm design. Studies recommend that diverse voices across age, culture, and background inform AI development, to minimize bias and safeguard pluralism in digital discourse.

Ethical Debates Shaping the Future of AI News

The convergence of artificial intelligence, news reporting, and social change sparks urgent ethical debates. These include concerns over privacy, bias, and accountability. Who decides which datasets train algorithms? How can society ensure AI-powered media is fair and avoids amplifying stereotypes? Universities and nonprofits publish frequent research on these questions, offering public lectures and workshops to keep communities engaged in the discussion.

Legislators and advocacy groups are calling for clearer regulations on how AI affects media production and dissemination. Proposals range from transparency mandates to guidelines for responsible AI deployment. Collaborations between tech companies, policymakers, and civil society form the engine for crafting balanced solutions—ones that encourage innovation while protecting public interest. Regular updates from government agencies and international organizations help track progress and address unintended consequences as they arise.

Ethical journalism guidelines are also adapting, with some newsrooms hosting dedicated ethics boards to evaluate AI usage. The future of AI news may rest on the ability to document processes, reveal limitations, and invite public scrutiny. For audiences, knowing how to evaluate digital information and demand accountability from content providers could be as important as the technology itself. This ongoing dialogue helps ensure that news remains a tool to inform, not manipulate, its audience.

How to Build Digital Literacy for Navigating AI News

With artificial intelligence influencing so much of the news cycle, digital literacy is critical. Readers benefit from understanding how algorithms shape their feeds and knowing the difference between automated and human-generated stories. Workshops, online tutorials, and public service announcements offer tips for assessing credibility, questioning sources, and cross-referencing headlines. Educational institutions and nonprofit organizations play a leading role in nurturing these skills for all age groups.

Media literacy programs encourage practical strategies, such as reverse image searches and fact-checking online statistics. Community libraries increasingly offer sessions focused on misinformation detection and evaluating viral trends. Collaborations between government agencies and advocacy groups provide teachers with up-to-date resources, reinforcing the importance of skepticism and accuracy when digesting current events. This approach empowers audiences to act as critical, informed consumers.

Curiosity and reflective thinking are core habits for digital literacy in the AI age. Rather than accepting information at face value, engaged readers seek context and recognize potential biases introduced by both technology and humans. By making use of publicly available guides, such as those from UNESCO and recognized journalism schools, anyone can strengthen their ability to navigate the AI-news landscape safely and confidently.

References

1. UNESCO. (n.d.). Addressing Hate Speech and Disinformation. Retrieved from https://en.unesco.org/fightfakenews

2. Pew Research Center. (2023). Public Perceptions of AI and its Impact on News. Retrieved from https://www.pewresearch.org/internet/2023/07/25/public-views-on-ai-and-news

3. Reuters Institute. (2023). Journalism, Media, and Technology Trends. Retrieved from https://reutersinstitute.politics.ox.ac.uk/journalism-media-and-technology-trends

4. Stanford University. (2022). AI Index Report. Retrieved from https://aiindex.stanford.edu/report/

5. International Federation of Journalists. (2021). Ethical Journalism in the Age of AI. Retrieved from https://www.ifj.org/media-centre/news/detail/category/press-releases/article/new-report-ethical-journalism-in-the-age-of-ai.html

6. Brookings Institution. (2023). Guardrails for AI and Trustworthy Media. Retrieved from https://www.brookings.edu/research/guardrails-for-ai-in-the-news/
