Discover why deepfakes are making headlines and how they’re influencing news and trends across social media platforms. This guide unpacks the technology, viral effects, and how users and organizations are responding to synthetic realities in the digital age.

Understanding Deepfakes and What Drives Their Spread

Deepfakes are synthetic media. They use artificial intelligence to create convincing images, audio, or videos that mimic real people. The term ‘deepfake’ is a blend of ‘deep learning’ (a branch of AI) and ‘fake.’ Viral deepfakes often emerge on social networks, sometimes tricking viewers by depicting fabricated celebrity speeches or political statements. The draw? Realism. Modern algorithms generate hyper-realistic details, so casual observers struggle to distinguish fake from real. Misinformation researchers note that the exponential growth of AI-generated content has coincided with more sophisticated social engineering tactics online, blurring the lines between entertainment, parody, and disinformation (https://www.brookings.edu/articles/deepfakes-and-synthetic-media/).

Why are deepfakes proliferating in newsfeeds? Accessibility and curiosity play huge roles. Open-source deep learning frameworks allow anyone to experiment with generative technology. As a result, the barrier to entry is lower than ever. Free tools on the web let creators swap faces or clone voices within minutes. Social media platforms tend to amplify content that grabs attention. Deepfake videos often go viral for their shock value or impressiveness, feeding an ecosystem shaped by user engagement. Even legitimate news outlets sometimes cover trending deepfakes, further amplifying their reach and visibility among the public.

The intentions behind deepfakes vary widely. Some are playful, like face-swapping celebrity memes, while others are malicious, spreading misinformation or manipulating public opinion. With elections, celebrity scandals, and public health news dominating headlines, deepfake technology is increasingly weaponized in information warfare. Researchers and journalists recommend fact-checking unusual clips and watching for known signs of digital manipulation, especially in viral news stories. Platforms have begun to develop detection systems, but the arms race between creators and defenders continues across social channels (https://www.niemanlab.org/2023/02/the-battle-against-deepfakes/).

The Technology Behind Deepfakes

Generative Adversarial Networks (GANs) are among the main engines fueling today’s deepfake revolution. A GAN pits two neural networks, a generator and a discriminator, against each other in an AI arms race. The generator creates synthetic media, while the discriminator tries to tell real from fake. Each network learns from the other’s mistakes, so over many training iterations the fakes become harder to detect. News outlets and tech blogs frequently highlight GAN breakthroughs as machine learning rapidly evolves. The technology is not exclusive to deepfakes; GANs also power advances in art, imaging, and even medicine. However, the ease of use and sophistication now available to amateurs is unprecedented (https://www.hbs.edu/digitalinitiative/blog/the-state-of-deepfake-technology/).
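To make the generator-versus-discriminator contest more concrete, here is a minimal sketch of the two objectives expressed as binary cross-entropy losses. It involves no neural networks and no training; the discriminator scores are made-up numbers, and the function names are illustrative rather than taken from any framework. It only shows how each side’s loss moves as the fakes get more convincing.

```python
import math

def bce(prediction, target):
    """Binary cross-entropy for one probability and a 0/1 target."""
    eps = 1e-12  # clamp to avoid log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(scores_on_real, scores_on_fake):
    """The discriminator wants real samples scored near 1 and fakes near 0."""
    real_term = sum(bce(s, 1.0) for s in scores_on_real) / len(scores_on_real)
    fake_term = sum(bce(s, 0.0) for s in scores_on_fake) / len(scores_on_fake)
    return real_term + fake_term

def generator_loss(scores_on_fake):
    """The generator wants its fakes scored near 1 (the non-saturating form)."""
    return sum(bce(s, 1.0) for s in scores_on_fake) / len(scores_on_fake)

# Hypothetical scores. Early on, the discriminator easily spots the fakes.
early = generator_loss([0.05, 0.10])
# Later, it can no longer tell and hovers around 0.5 for everything.
late = generator_loss([0.5, 0.5])
stalemate = discriminator_loss([0.5, 0.5], [0.5, 0.5])

print(f"generator loss early: {early:.3f}, late: {late:.3f}")
print(f"discriminator loss when it is reduced to guessing: {stalemate:.3f}")
```

The generator’s loss falls as its fakes score closer to 1, and a discriminator that outputs 0.5 for everything is effectively guessing, which is the stalemate the arms race tends toward.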

There are several key steps in creating a convincing deepfake. Large datasets of real facial images or voices are used to train the AI. The more data, the better the results. High-powered graphics cards process this information and refine the output, making the synthetic material increasingly lifelike. Sometimes voice cloning is added for a complete digital impersonation. This blend of accessibility and computing power has spurred mainstream experimentation and introduced new risks in the media and journalism sectors. Technology companies repeatedly update their frameworks to fix vulnerabilities, but the trend toward more robust deepfake creation tools is visible in online forums and developer networks.

Detection tools have had to keep pace. Universities, tech giants, and independent researchers are developing algorithms to spot synthesized content. Common indicators include unnatural eye blinking, odd facial expressions, and mismatched lighting. But as GANs evolve, these traces become harder to spot. International organizations and major media companies have invested in partnerships for deepfake detection and digital forensics, acknowledging both the challenges and the societal impact. The future likely holds even more advanced synthetic media: not just video, but also audio and text. The rapid development of this technology has profound implications across news, policy, and entertainment landscapes (https://www.europarl.europa.eu/news/en/headlines/society/20211013STO14917/deepfakes-the-impact-of-artificial-intelligence-on-democracy).
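As a rough illustration of the eye-blinking indicator, the sketch below counts blinks in a per-frame "eye openness" series and flags clips where the subject blinks unusually rarely. Everything specific here is an assumption: the openness values stand in for whatever a face-tracking tool might report, and the thresholds and function names are invented for the example. A heuristic like this is at best one weak signal, not a detector.

```python
def count_blinks(eye_openness, closed_below=0.2):
    """Count open-to-closed transitions in a per-frame eye-openness series."""
    blinks = 0
    eye_closed = False
    for value in eye_openness:
        if value < closed_below and not eye_closed:
            blinks += 1          # eye has just closed: start of a blink
            eye_closed = True
        elif value >= closed_below:
            eye_closed = False   # eye is open again
    return blinks

def blink_rate_is_suspicious(eye_openness, fps=30.0, min_blinks_per_minute=5.0):
    """Flag a clip whose blink rate falls below an illustrative threshold."""
    minutes = len(eye_openness) / fps / 60.0
    if minutes == 0:
        return False  # nothing to judge
    return count_blinks(eye_openness) / minutes < min_blinks_per_minute

# Ten seconds of hypothetical footage at 30 frames per second.
natural = [0.3] * 300
for start in (50, 150, 250):           # three short blinks
    for frame in range(start, start + 4):
        natural[frame] = 0.1
unblinking = [0.3] * 300               # eyes never close

print(count_blinks(natural), blink_rate_is_suspicious(natural))
print(count_blinks(unblinking), blink_rate_is_suspicious(unblinking))
```

Because newer generators reproduce blinking more faithfully, a check like this would normally be combined with other cues such as lighting and facial-expression consistency.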

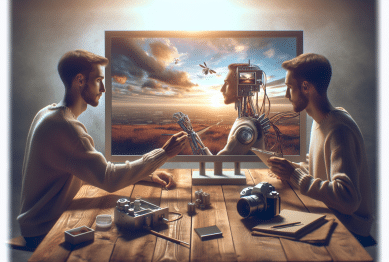

Deepfakes in News, Politics, and Entertainment

One reason deepfakes dominate headlines is their disruptive potential in politics and journalism. Fake political speeches or doctored news clips can circulate widely before corrections emerge, triggering confusion and debate. In some cases, political deepfakes are designed to mimic world leaders or public figures during critical events. Fact-checkers and major news agencies now monitor viral videos with renewed diligence, prioritizing public trust. Understanding the mechanics of synthetic media is central to verifying news sources. Readers can learn to cross-reference major news stories, check for coverage by reputable outlets, or use digital verification tools designed for news consumers.

Entertainment industries have also adopted deepfake tools for more creative purposes. Celebrity deepfakes — such as those recreating classic movie scenes or imagining alternate casting — spark conversations online about digital identity, copyright, and audience engagement. Visual effects studios sometimes employ similar techniques for CGI or de-aging actors. While playful deepfakes generate buzz, platforms are increasingly concerned with the ethical implications. Organizations like the SAG-AFTRA actors’ union work on policy recommendations, while Hollywood studios negotiate technology use in contracts and image rights. Some platforms now label or remove deepfake entertainment content to avoid misleading audiences, especially in advertising or promoted posts.

Cultural and political impacts are wide-ranging. In regions with ongoing elections or civil unrest, weaponized deepfakes have been deployed to influence voter perceptions or sow chaos. Journalistic exposés discuss the use of deepfakes in disinformation campaigns linked to foreign interference or domestic activism. Even satire and parody must now walk a line, as platform standards and legal frameworks attempt to balance free speech with harm reduction. For users, staying informed about the prevalence of deepfakes — and knowing which organizations or newsrooms are prioritizing fact-checking — helps maintain critical engagement in a world where the line between real and synthetic blurs daily (https://firstdraftnews.org/articles/fact-checking-deepfakes/).

How Platforms and Fact-Checkers Respond

Major social media companies have invested heavily in detection and moderation strategies to combat malicious deepfakes. Facebook, Twitter, and YouTube have developed automated systems that attempt to flag or remove synthetic content that violates their guidelines. Fact-checking networks collaborate with these platforms to flag trending videos suspected of manipulation. Flagged content is sometimes labeled, demoted in feeds, or removed altogether. In transparency reports, companies share insights into the surge of deepfake-related moderation events, highlighting both successes and persistent gaps.

Fact-checkers are equally active in the battle against synthetic misinformation. Independent organizations, many linked to universities or media watchdogs, produce swift debunks and explainer articles on viral deepfakes. These teams often use reverse image searches, metadata analysis, and AI detection tools. Educating users about these techniques is a central pillar of media literacy campaigns, encouraging skepticism and verification before sharing provocative videos or audio clips. Public service announcements and school curricula increasingly include instruction on identifying manipulated media.
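One idea behind matching a suspicious image against known originals is perceptual hashing: reducing a picture to a short fingerprint that survives small edits. The sketch below implements a simple "average hash" on tiny hand-written grayscale grids. It is an illustration only; the grids are made up, real tools first downscale actual image files, and reverse image search engines rely on far more than this one technique.

```python
def average_hash(pixels):
    """Return one bit per pixel: 1 if brighter than the image mean, else 0."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]

def hamming_distance(hash_a, hash_b):
    """Number of positions where two equal-length hashes differ."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(hash_a, hash_b))

# Hypothetical 4x4 grayscale image: dark on the left, bright on the right.
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 202, 212],
    [18, 28, 208, 218],
]
# The same picture, slightly brightened, as a filter or re-upload might do.
brightened = [[min(255, value + 8) for value in row] for row in original]
# A different picture: bright on the left, dark on the right.
mirrored = [list(reversed(row)) for row in original]

same = hamming_distance(average_hash(original), average_hash(brightened))
different = hamming_distance(average_hash(original), average_hash(mirrored))
print(f"distance to brightened copy: {same}, to mirrored image: {different}")
```

A small distance suggests the two images share a source even after light editing, which is what lets a fact-checker trace a viral frame back to earlier, unaltered footage.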

Governments and NGOs have started to regulate and analyze deepfake dissemination. Some countries propose or enact legislation to prohibit malicious use or require obvious labelling of synthesized media. International collaborations, from the European Union to the United Nations, explore best practices and standards to safeguard public discourse. While regulation can lag behind fast-moving technology, evolving laws make it clear that unchecked deepfakes will face more legal scrutiny, especially when used for fraud, harassment, or electoral interference (https://www.unesco.org/en/artificial-intelligence/deepfakes).

Everyday Tips for Navigating Deepfake News

Detecting a deepfake can be tricky, especially as quality improves. Experts suggest watching for inconsistencies in facial expressions, unnatural movement, or glitchy transitions. Verify with a reverse image search or compare with other reputable sources. If a video triggers a strong emotional reaction or arrives without context, it’s wise to pause before sharing. This approach improves information hygiene across the broader community.

Media literacy is the best defense. Schools, libraries, and civic organizations have embraced training focused on spotting manipulated media. Several online platforms offer free tutorials on AI detection and fact-checking techniques. Participating in these programs builds individual skills and helps foster responsible communication habits. Stay updated with trusted fact-checking websites, which often flag viral fakes early and explain their findings in plain language.

Don’t underestimate peer learning. Communities and group chats often play a vital role in surfacing and sharing resources about new deepfake trends. By communicating openly, discussing how to check claims, and being transparent about content origins, groups help reduce the spread of misinformation. If in doubt, look for media labelled as verified by fact-checking organizations or follow guidance from respected news providers and digital watchdogs. Curiosity paired with healthy skepticism builds a safer media ecosystem for all (https://medialiteracynow.org/how-to-spot-a-deepfake/).

Where Deepfake Trends Are Headed Next

Looking ahead, deepfake technology is expected to get even more sophisticated. Real-time video manipulation and AI voice cloning will likely expand into mainstream use. Some experts predict that synthetic media will become integrated into digital identities, storytelling, and even business communications. Ethical debates around personal privacy and consent are heating up as these innovations grow more powerful. Organizations including universities, research centers, and think tanks urge collaborative efforts between technologists, regulators, and the public to manage these changes responsibly.

Emerging trends show that automation will play a growing role in both deepfake creation and detection. Newsrooms are investing in AI-based verification tools, while digital rights advocates stress the importance of transparency. New regulations may require tagging or watermarking of synthetic content. At the same time, artists and activists continue to harness deepfake technology for storytelling, satire, and public education. Balancing innovation with responsibility is a challenge, but the conversation around deepfakes is driving new standards for trust in digital media.
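For a sense of what tagging synthetic content could look like in practice, here is a minimal sketch of a signed "synthetic media" label stored alongside a file. It is an assumption-laden illustration, not an implementation of any existing standard or regulation: the label fields, function names, and shared secret key are invented, and real provenance schemes typically use public-key signatures rather than a shared key. Note that this is a sidecar tag, not a watermark embedded in the media itself.

```python
import hashlib
import hmac
import json

def make_label(media_bytes, signing_key, generator_name):
    """Build a signed label declaring the media synthetic (hypothetical format)."""
    claim = {
        "synthetic": True,
        "generator": generator_name,
        "sha256": hashlib.sha256(media_bytes).hexdigest(),  # binds label to content
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(signing_key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}

def verify_label(media_bytes, label, signing_key):
    """Check that the label is authentic and still matches the media."""
    payload = json.dumps(label["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(signing_key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, label["signature"])
    content_ok = label["claim"]["sha256"] == hashlib.sha256(media_bytes).hexdigest()
    return signature_ok and content_ok

key = b"demo-key-for-illustration-only"   # placeholder secret
video = b"pretend these bytes are a rendered clip"
label = make_label(video, key, "example-generator")

print(verify_label(video, label, key))                 # untouched file
print(verify_label(video + b" edited", label, key))    # file changed after labelling
```

The design point is that the label covers a hash of the content, so editing the file or altering the claim breaks verification. A tag of this kind can still simply be stripped, though, which is one reason watermarking is discussed alongside it.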

Regardless of where technology goes next, one thing remains clear: digital literacy and cross-sector collaboration are key. Understanding how deepfakes are made, shared, and detected will empower users to make informed choices. For anyone curious about news and media trends, exploring these evolving dynamics is both timely and essential. Deepfakes are here to stay, shaping conversations and influencing perceptions — but informed communities are building defenses. Explore more about this technology and stay ahead of the curve with reputable, up-to-date guidance from the organizations referenced throughout this guide.

References

1. Brookings Institution. (n.d.). Deepfakes and synthetic media. Retrieved from https://www.brookings.edu/articles/deepfakes-and-synthetic-media/

2. Nieman Lab. (2023). The battle against deepfakes. Retrieved from https://www.niemanlab.org/2023/02/the-battle-against-deepfakes/

3. Harvard Business School. (n.d.). The state of deepfake technology. Retrieved from https://www.hbs.edu/digitalinitiative/blog/the-state-of-deepfake-technology/

4. European Parliament. (2021). Deepfakes: The impact of artificial intelligence on democracy. Retrieved from https://www.europarl.europa.eu/news/en/headlines/society/20211013STO14917/deepfakes-the-impact-of-artificial-intelligence-on-democracy

5. First Draft News. (n.d.). Fact-checking deepfakes. Retrieved from https://firstdraftnews.org/articles/fact-checking-deepfakes/

6. Media Literacy Now. (n.d.). How to spot a deepfake. Retrieved from https://medialiteracynow.org/how-to-spot-a-deepfake/
