Artificial intelligence is everywhere in today’s newsrooms, shaping headlines, fact-checking, and how stories reach people. This guide explores how the news you encounter is being transformed by smart algorithms, real-time analysis, and evolving journalistic practices. Discover the technology’s surprising influences and what it could mean for your daily information diet.

The Rise of AI in Newsrooms

In a modern newsroom, artificial intelligence tools are woven into many everyday tasks. Algorithms help reporters sift through large data sets, flag emerging trends, and suggest story angles sourced from global conversations. This isn’t about replacing journalists but rather augmenting their skills with extra speed and accuracy. The process of news creation has shifted. Now, data analysis can surface newsworthy events almost as they happen, allowing human writers to spend more time exploring context and depth in stories. As more organizations experiment with AI-powered content generation, the debate continues about how much automation is too much, and which tasks should remain strictly human-led.

AI in journalism isn’t just about creating content; it streamlines operations like transcription, translation, and archiving. Automated systems transcribe interviews, making it easy for reporters to look back through hours of conversations. Translation tools enable global media outlets to offer stories in multiple languages, closing cultural gaps between audiences. Meanwhile, large-scale databases can be indexed and cross-referenced automatically, making historical research faster. The delivery of breaking news has benefited, too. Machine learning models recognize anomalies—say, unusual traffic spikes or trending hashtags—alerting editors early to stories worth investigating. This blend of efficiency and detection helps newsrooms stay competitive in the digital age.
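The anomaly alerts described above can be illustrated with a very simple statistical check. The sketch below is only an illustration, not how any particular newsroom does it: the function name `find_spikes`, the window size, the threshold, and the sample numbers are all assumptions made up for this example. It flags an hour when mentions of a topic jump far above the recent average.

```python
from statistics import mean, stdev

def find_spikes(counts, window=6, threshold=3.0):
    """Return indices whose value sits more than `threshold` standard
    deviations above the mean of the preceding `window` values.
    (Hypothetical helper; window and threshold are illustrative defaults.)"""
    spikes = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: a z-score is undefined, so skip this point.
            continue
        if (counts[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes

# Made-up hourly counts of a hashtag; hour 7 is the obvious jump.
hourly_mentions = [12, 15, 11, 14, 13, 12, 14, 95, 16, 13]
print(find_spikes(hourly_mentions))
```

In practice a flagged hour would only be a prompt for an editor to take a look; the check says nothing about whether the underlying story is true or newsworthy.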

Some newsrooms use AI chatbots to interact with audiences or guide readers to information on specific topics. For instance, these bots can help users explore complex policies or answer questions about big events. By monitoring reader engagement in real-time, AI systems suggest personalized recommendations and optimize content for shifting interests. But AI’s expanding presence is not without skepticism. Concerns around transparency, editorial independence, and loss of nuance have prompted journalists and regulators to seek clear ethical guardrails. Ultimately, newsroom AI is a tool—one that can support deeper reporting, provided its use is informed by responsibility and integrity.

Personalized News Feeds and Algorithmic Curation

Every scroll through a news app or social platform is shaped by algorithms. These digital gatekeepers use machine learning to select which headlines, op-eds, and videos land in your view. By analyzing a user’s location, reading history, and even social media shares, platforms prioritize content likely to catch each reader’s eye. This creates a tailored news feed, meant to keep engagement high. For some, this means seeing local events or breaking global updates first. Others may find themselves drawn into deep-dives on preferred topics—from health innovations to emerging financial trends. While personalization is convenient, it also raises important questions about diversity of information and potential filter bubbles, where audiences only see news that matches their existing beliefs.
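To make the idea of a tailored feed concrete, here is a minimal sketch of interest-based ranking. It assumes a toy scoring rule (share of past reads per topic, plus a fixed boost for local stories); the `Story` class, `rank_stories` function, and `local_boost` parameter are hypothetical names invented for this example, and real recommendation systems use far richer signals.

```python
from dataclasses import dataclass

@dataclass
class Story:
    title: str
    topic: str
    region: str

def rank_stories(stories, topic_reads, user_region, local_boost=1.0):
    """Order stories by a toy relevance score (illustrative assumption):
    fraction of the user's past reads on that topic, plus a boost if local."""
    total = sum(topic_reads.values()) or 1  # avoid dividing by zero for new users

    def score(story):
        interest = topic_reads.get(story.topic, 0) / total
        local = local_boost if story.region == user_region else 0.0
        return interest + local

    return sorted(stories, key=score, reverse=True)

stories = [
    Story("Budget vote", "politics", "national"),
    Story("New clinic opens", "health", "springfield"),
    Story("Market update", "finance", "national"),
]
reads = {"health": 8, "finance": 2}  # made-up reading history
for s in rank_stories(stories, reads, user_region="springfield"):
    print(s.title)
```

Notice that the politics story scores zero because this reader has never opened one. That is the filter-bubble effect in miniature: a topic the user has not read before never gets a chance to surface unless the scoring rule deliberately makes room for it.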

Algorithmic curation has significant implications for news consumption patterns. Research shows that people are more likely to engage with stories that align closely with their interests and viewpoints. AI-driven personalization is designed to deliver such content, often increasing time spent on the platform. However, these same algorithms can inadvertently limit exposure to opposing perspectives or lesser-known voices. The power of personalization means decision-makers must consider how their systems might influence public understanding of critical events. Transparency about how news feeds are curated is becoming a standard request, with some organizations sharing details of their recommendation engines and the principles guiding them (Source: https://www.niemanlab.org/2019/09/ai-in-newsrooms/).

Major outlets experiment with hybrid models, combining automated recommendations with editorial picks, to balance efficiency and oversight. For example, a front page might feature trending stories chosen by algorithms but highlight investigative pieces selected by editors. Some platforms promote news literacy initiatives, helping audiences understand why they see what they see. The shift towards AI-driven curation isn’t slowing. But the call for more transparency, diversified sources, and actionable user controls points to an evolving partnership between human values and smart software. The goal: to inform readers, not just retain their attention.
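A hybrid front page of this kind could be assembled along the following lines. This is a sketch under stated assumptions, not a description of any outlet's system: the function `build_front_page` and its `slots` and `reserved_for_editors` parameters are hypothetical. Editors' picks take the reserved top positions, and algorithmically trending stories fill whatever space remains.

```python
def build_front_page(editor_picks, trending, slots=5, reserved_for_editors=2):
    """Combine editorial picks with trending stories (illustrative sketch).
    Editors' picks fill the first `reserved_for_editors` positions; trending
    stories fill the rest, skipping anything already on the page."""
    page = list(editor_picks[:reserved_for_editors])
    for story in trending:
        if len(page) >= slots:
            break
        if story not in page:
            page.append(story)
    return page

# Made-up headlines for demonstration.
editor_picks = ["Investigation A", "Investigation B", "Investigation C"]
trending = ["Storm", "Investigation A", "Election", "Sports", "Tech"]
print(build_front_page(editor_picks, trending, slots=4))
```

Reserving a fixed number of slots is one simple way to guarantee that editorial judgment is always visible, whatever the engagement numbers say.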

Speed, Accuracy, and Real-Time Fact Checking

AI supercharges newsroom speed. Breaking news can spread globally within seconds as automated systems monitor and gather data from official sources, television feeds, and millions of social media messages. Natural language processing tools identify emerging trends or inconsistencies, quickly surfacing new leads for editors to investigate. The goal is not only speed, but also accuracy—reducing the risk of spreading misinformation in a race to publish first. Some organizations now deploy AI-assisted fact-checkers. These tools compare new reports against established data and trusted newswires, flagging possible errors or misleading details. The process makes journalists more confident when reporting on rapidly unfolding situations.
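The comparison step in AI-assisted fact-checking can be pictured with a small example. The sketch below assumes the claims in a draft have already been extracted as named numbers, which is the hard part and is not shown here; `flag_claims`, the `tolerance` parameter, and the sample figures are all hypothetical. It simply flags figures that differ from a trusted reference by more than a set margin, or that have no reference at all.

```python
def flag_claims(claims, reference, tolerance=0.05):
    """Compare numeric claims against reference values (illustrative sketch).
    Returns (name, reason) pairs for claims that a human should review."""
    flags = []
    for name, claimed in claims.items():
        if name not in reference:
            flags.append((name, "no reference value"))
            continue
        expected = reference[name]
        if expected == 0:
            mismatch = claimed != 0
        else:
            # Relative difference, so the margin scales with the figure's size.
            mismatch = abs(claimed - expected) / abs(expected) > tolerance
        if mismatch:
            flags.append((name, f"claimed {claimed}, reference {expected}"))
    return flags

# Made-up reference data and draft claims.
reference = {"unemployment_rate": 4.1, "population_millions": 8.4}
claims = {"unemployment_rate": 4.1, "population_millions": 9.5, "homes_damaged": 1200}
for name, reason in flag_claims(claims, reference):
    print(name, "->", reason)
```

A flag here means "check this", not "this is wrong": the reference itself may be outdated, which is one reason the editorial review discussed next still matters.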

Human oversight remains essential. Machines struggle with nuance and context, especially in complex international conflicts or developing political stories. That’s why newsrooms often combine fast, AI-based vetting with expert editorial review. Automated tools help spot factual inconsistencies or outdated information, but humans provide judgment about tone, intent, and fairness. Effective use of real-time verification tools has proven particularly important during natural disasters and emergencies, when rumors can rapidly circulate. In these moments, combining algorithmic scrutiny with seasoned reporting is vital for public trust (Source: https://www.poynter.org/fact-checking/2022/ai-fact-checking-news/).

Large news agencies now integrate AI-powered monitoring into their core operations. Machine learning models constantly analyze text, visual, and audio data for signs of fraud, altered images, or deepfakes. By automating initial vetting, AI frees up journalists to pursue investigative angles or explore wider story ramifications. The growing sophistication of digital misinformation means these tools are becoming a newsroom staple. When used thoughtfully, AI helps maintain accuracy and boosts credibility. Yet ongoing investment in journalist training and digital literacy is equally important to help everyone—reader and reporter alike—navigate the changing news landscape with confidence.

Battling Misinformation and Deepfakes

Misinformation poses a major challenge for today’s media environment. Advances in artificial intelligence have enabled the creation of hyper-realistic images, videos, and audio known as deepfakes. These manipulated media pieces can spread quickly, especially on social networks. News outlets are developing new strategies to respond, employing both automated and manual detection tools to identify altered content before it reaches large audiences. AI-powered software can flag patterns typical of digital tampering or inconsistencies in speech and video. This technology is evolving rapidly in response to a continuously adapting threat.

Journalistic organizations are collaborating with universities and civic groups to advance research in misinformation detection. Tools such as reverse-image search, metadata analysis, and audio forensics are augmented with machine learning to confirm content authenticity. Leading global outlets share resources and findings, building networks for fast response to coordinated disinformation efforts. Media literacy campaigns have surged, teaching the public how to verify sources, recognize clickbait, and get involved in citizen reporting. These efforts make readers part of the solution, increasing the overall resilience of the information ecosystem (Source: https://www.brookings.edu/articles/deepfakes-and-disinformation/).

While deepfakes are concerning, tools to detect and counter them are improving. Some platforms invest in watermarking technologies and transparency labels to help audiences identify authentic sources. Legislation is also evolving as governments debate the balance between free speech and information security. Industry partnerships continue to push for standards in reporting, labeling synthetic content, and sharing best practices. In the end, the fight against misinformation is an ongoing contest between those who seek to manipulate and the evolving toolkit of responsible journalism. Digital vigilance and cross-sector collaboration remain crucial.

Privacy Concerns and Ethical AI Use in Journalism

The widespread adoption of AI in news comes with serious privacy and ethics considerations. News organizations collect user data to refine content recommendations, study engagement, and develop targeted stories. But how this data is gathered, stored, and analyzed is under scrutiny from privacy advocates and watchdog groups. Many audiences want clear disclosures explaining what information is used and why. Transparent privacy policies are quickly becoming a mark of credibility for digital outlets. Meanwhile, journalists grapple with questions about data security—protecting both sources and readers from misuse or external attacks.

AI-powered news can raise difficult questions about bias in algorithmic decisions. If left unchecked, these biases may influence which stories gain attention or how issues are framed, potentially echoing historical inequities. Responsible AI use requires diverse data sets, regular audits for fairness, and ongoing oversight by humans with strong editorial principles. Professional organizations and universities offer guidelines to help media teams develop ethical policies for AI applications. Maintaining public trust depends on these policies being respected and accessible, not just technical afterthoughts (Source: https://www.cjr.org/tow_center_reports/algorithms-ethics-journalism.php).
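One very basic form of fairness audit is to compare what a recommendation system promotes with what the newsroom actually published. The following sketch assumes each story carries a single category label; the function `exposure_gap` and the sample data are hypothetical, and a real audit would look at many more dimensions than topic alone.

```python
from collections import Counter

def exposure_gap(published, recommended):
    """For each category, return (share of recommendations) minus
    (share of published stories). Positive means over-promoted.
    (Illustrative sketch; category labels are assumed to exist.)"""
    if not published or not recommended:
        raise ValueError("both lists must be non-empty")
    pub = Counter(published)
    rec = Counter(recommended)
    return {
        category: round(rec[category] / len(recommended) - pub[category] / len(published), 2)
        for category in pub
    }

# Made-up category labels for ten published and ten recommended stories.
published = ["politics"] * 4 + ["local"] * 4 + ["science"] * 2
recommended = ["politics"] * 7 + ["local"] * 2 + ["science"] * 1
print(exposure_gap(published, recommended))
```

A large positive or negative gap does not prove bias on its own, but it gives editors a concrete number to question during the regular reviews mentioned above.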

Journalists and technologists increasingly work together to create transparency dashboards, open-source tools, and explainers about AI’s newsroom role. Some newsrooms publicly release audit results of their content recommendation systems. Audiences are also given more control over what data they share and how news products adapt to their preferences. These partnerships and initiatives signal a shift towards more participatory journalism, where readers are not just consumers but stakeholders in the evolving news process. Ethical AI isn’t about having perfect answers, but about constantly refining the questions and safeguards that protect everyone’s interests online.

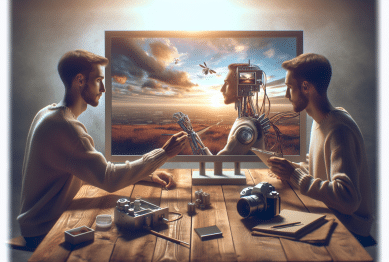

Human Stories in a Digital News Era

Despite automation and machine learning advances, stories with heart continue to draw the largest and most loyal audiences. Human voices shape the context and emotional resonance behind urgent issues, cultural shifts, and local events. AI tools can crunch numbers or surface trends, but only experienced reporters synthesize complex realities into narratives that inspire debate, learning, and empathy. That’s why major news outlets prioritize multimedia reporting—combining personal interviews, immersive visuals, and real-world examples.

The expansion of digital journalism has enabled broader participation. Community reporting, independent blogs, and micro-podcasts offer new entry points for diverse voices. AI plays a role in helping these smaller producers reach audiences through content optimization and social media analysis. However, meaningful engagement requires an intentional effort to preserve authenticity, representation, and cultural context. As readers, many people favor stories where technology’s reach is balanced by editorial sensitivity and real-life experience (Source: https://www.reutersinstitute.politics.ox.ac.uk/risj-review/ai-and-journalism-how-it-being-used-and-benefits-and-challenges).

Looking ahead, the partnership between humans and machines is expected to deepen. Editorial oversight will shape how algorithms evolve, grounding new technology in core journalistic values. Training programs, mentorship, and open dialogue about AI’s role in reporting help keep storytelling smart, but also principled. As digital transformation accelerates, the stories that stick will be those that balance innovative technology with the timeless craft of authentic journalism.

References

1. Shearer, E. & Grieco, E. (2021). Automation in News: The Latest Trends and Implications. Retrieved from https://www.pewresearch.org/journalism/2021/08/04/newsroom-automation-trends/

2. Napoli, P. M. (2019). Algorithmic Journalism and Digital Newsmaking. Retrieved from https://www.niemanlab.org/2019/09/ai-in-newsrooms/

3. Mantzarlis, A. (2022). AI’s Role in Fact Checking. Retrieved from https://www.poynter.org/fact-checking/2022/ai-fact-checking-news/

4. West, D. M. (2020). Deepfakes and Disinformation. Retrieved from https://www.brookings.edu/articles/deepfakes-and-disinformation/

5. Diakopoulos, N. (2019). Algorithms and Ethics in Journalism. Retrieved from https://www.cjr.org/tow_center_reports/algorithms-ethics-journalism.php

6. Beckett, C. (2020). AI and Journalism: How It’s Being Used and the Benefits and Challenges. Retrieved from https://www.reutersinstitute.politics.ox.ac.uk/risj-review/ai-and-journalism-how-it-being-used-and-benefits-and-challenges
