Artificial intelligence is quietly transforming news and trends, often in ways readers rarely notice. This article explores how AI powers the headlines you read, shapes how you access information, affects digital journalism, and introduces new ethical debates into the media landscape.

Emergence of Artificial Intelligence in News Media

Artificial intelligence has become an increasingly integral part of the news ecosystem. Initially, newsroom adoption was slow due to technological uncertainty and concerns about data security. However, recent years have seen a rapid integration of machine-learning algorithms and natural language processing. These technologies power everything from article recommendations to automated story generation. The influence is subtle but powerful: readers may not even notice when AI is behind their daily news feeds. As a result, both individual journalists and large media houses rely on AI-driven tools for research, trend analysis, and content curation (see Nieman Lab).

Several leading digital outlets now use AI to produce real-time news briefs, categorize breaking stories, and identify patterns from vast data sets. This has helped newsrooms respond to trending topics with unprecedented speed. Algorithms scour social platforms and databases for significant developments, providing journalists with early insight before stories go viral. The result? Audiences receive more timely, relevant, and tailored content, matched closely to evolving interests and global happenings (see Poynter).

Despite these advances, debate continues over whether AI distances journalism from its human element. Some worry that audience connections could weaken when stories are produced or selected by machines instead of people. The balance between technological innovation and editorial integrity is now a key focus of industry conversations. In the end, media organizations that blend automation with human oversight tend to achieve the most trusted and engaging results.

Personalized News Delivery: Benefits and Dilemmas

Today’s news experience is shaped by personalized delivery—a direct result of AI’s data-processing ability. Machine-learning algorithms analyze reading habits, location, and interaction patterns, creating unique news streams for every user. This personal touch increases engagement and helps users sift through the massive volume of global news stories. Customized feeds mean fewer irrelevant articles, fostering a sense of relevance with each scroll (see Pew Research Center).

However, personalization isn’t without risks. Algorithm-driven curation can reinforce existing preferences, creating echo chambers where users encounter only viewpoints similar to their own. While convenient, this limits exposure to diverse perspectives and can skew perceptions of major events. Newsrooms and social platforms alike now face increased scrutiny over the transparency of these algorithms. AI ethics in media, therefore, involves a balancing act between delivering relevant content and ensuring a well-rounded informational diet for society.

Efforts to address these challenges include algorithm audits, disclosure of personalization practices, and the introduction of counter-bias mechanisms within digital tools. Transparent communication about how news is selected has proven crucial in building reader trust. Users now expect a say in the types of stories they receive, and some platforms allow manual customizations to diversify news input. The conversation around AI-curated news continues to evolve, with equity and accountability at its core.
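To make the idea of a counter-bias mechanism concrete, here is a minimal sketch of a personalized ranking with a per-topic cap. It is only an illustration under simple assumptions, not how any particular platform works: the function name `rank_feed`, the `topic` field, and the `max_per_topic` setting are all hypothetical.

```python
from collections import Counter


def rank_feed(articles, topic_clicks, max_per_topic=2):
    """Rank articles by a reader's past topic clicks, capping each topic.

    All names here are hypothetical and chosen for illustration only.
    """
    total = sum(topic_clicks.values()) or 1
    # Personalization step: score each article by the share of the
    # reader's past clicks that went to its topic.
    scored = sorted(
        articles,
        key=lambda a: topic_clicks.get(a["topic"], 0) / total,
        reverse=True,
    )
    feed, overflow, seen = [], [], Counter()
    # Counter-bias step: once a topic reaches the cap, further articles
    # on that topic are pushed behind the rest of the feed.
    for article in scored:
        if seen[article["topic"]] < max_per_topic:
            feed.append(article)
            seen[article["topic"]] += 1
        else:
            overflow.append(article)
    return feed + overflow


articles = [
    {"id": 1, "topic": "sports"},
    {"id": 2, "topic": "sports"},
    {"id": 3, "topic": "sports"},
    {"id": 4, "topic": "science"},
    {"id": 5, "topic": "politics"},
]
feed = rank_feed(articles, {"sports": 9, "science": 1})
print([a["id"] for a in feed])
```

In this toy example a reader who mostly clicks on sports still sees science and politics stories ahead of the third sports story. Real systems use far richer signals, but the trade-off between relevance and variety is the same.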

Impact of Deepfake Technology and Visual Manipulation

Artificial intelligence is not only shaping written journalism; it’s transforming audio and visual news media in equally dramatic ways. The emergence of deepfake video and photorealistic image synthesis tools has introduced both impressive creative opportunities and serious risks. These AI tools can seamlessly generate or alter footage to show events that never occurred, blurring the line between fact and fiction. In some cases, news outlets harness AI-based content creation to enhance multimedia storytelling. Yet, the very same technology can enable misleading visuals and counterfeit content (see Brookings Institution).

This raises new ethical dilemmas for journalists and audiences alike. When photo evidence can be fabricated with minimal effort, verifying authenticity becomes a central challenge. Newsrooms are now investing in AI-driven image and audio verification tools to detect manipulated content before it’s published. Public education about deepfakes forms a crucial part of this defense, empowering people to question extraordinary visuals or celebrity appearances that circulate online.

Technological arms races often accompany such developments. As AI detection algorithms improve, deepfake tools become more sophisticated as well. Media literacy initiatives are expanding to cover not just critical reading, but also critical viewing. The stakes are high, since visual misinformation can influence everything from election outcomes to public safety. As a result, ethical news production now relies on multi-layered safeguards, combining human expertise with algorithmic screening.

AI and the Acceleration of Real-Time Reporting

One major advantage of AI in newsrooms is the capacity for real-time reporting. High-frequency data collection from financial markets, global weather systems, or disaster alerts gets fed instantly to news platforms. Algorithms parse these feeds and draft initial bulletins within seconds. The result is faster public awareness, especially for breaking events and emergencies (see Pew Research Center).
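A simple way to picture automated bulletin drafting is template filling: a structured record from a data feed is slotted into a prewritten sentence. The sketch below assumes a hypothetical record format and hypothetical templates; production systems are considerably more elaborate, and the `needs_review` flag is an assumption added here to mark the draft for an editor.

```python
def draft_bulletin(event):
    """Turn one structured feed record into a one-sentence draft bulletin.

    The record fields and templates are hypothetical examples.
    """
    templates = {
        "earthquake": (
            "A magnitude {magnitude} earthquake struck near {place} "
            "at {time} UTC."
        ),
        "market": "{index} moved {change:+.1f}% as of {time} UTC.",
    }
    template = templates.get(event["type"])
    if template is None:
        # Unknown event types are left for a human editor.
        return None
    text = template.format(**event)
    # Every automated draft is flagged for human review before release.
    return {"text": text, "needs_review": True}


record = {
    "type": "earthquake",
    "magnitude": 5.8,
    "place": "Exampleville",
    "time": "14:02",
}
print(draft_bulletin(record)["text"])
```

Because the sentence structure is fixed in advance, the draft can be produced almost instantly; the hard part in practice is making sure the incoming data is correct.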

This speed helps newsrooms outpace the spread of misinformation, because verified details can arrive before rumors take hold. Automated fact-checking tools help provide on-the-fly corrections, reducing the risk of viral disinformation. Nevertheless, excessive automation in live updates poses its own risks: errors can propagate widely before human review occurs. Responsible editorial teams maintain oversight and validate major alerts before distribution.

Beyond crisis coverage, AI also leverages archives and trending social conversations to spot early signals of cultural shifts. Stories about societal changes, scientific breakthroughs, or market volatility can be surfaced sooner—sometimes before they even trend on social channels. As a result, journalists spend more time on analysis and less on manual data collection. The digital news cycle accelerates, while retaining possibilities for deeper coverage and investigative reporting alongside rapid updates.

Challenges and Ethical Considerations of AI-Powered News

As AI technologies grow more advanced, journalists, technologists, and policymakers face significant ethical questions. Who is responsible if an algorithm amplifies false narratives or unintentionally omits critical voices? Ownership, accountability, and representation remain central issues. Media companies are forming ethics boards and partnering with independent watchdogs to shape fair AI deployment in newsrooms (see Tow Center for Digital Journalism).

Transparency is a key demand. Audiences increasingly want to know whether stories were suggested or written with help from artificial intelligence. Some outlets now label AI-generated articles, while others provide overviews of their data and technology policies. In some cases, collaborative guidelines propose regular audits of editorial algorithms and ongoing public consultation about automation standards. These practices help foster trust, but there’s no universal solution yet.

Continued innovation in AI for the news sector requires vigilance and adaptation. Overly narrow algorithms risk reinforcing systemic bias, while unchecked data use can raise privacy concerns. Newsrooms aim to strike a balance—leveraging computational journalism to inform communities, while preserving editorial oversight and audience trust. Ultimately, the future of AI in news depends on a mix of transparent policies, technological sophistication, and human-centric values that reflect society’s needs.

The Future Outlook for Artificial Intelligence in News

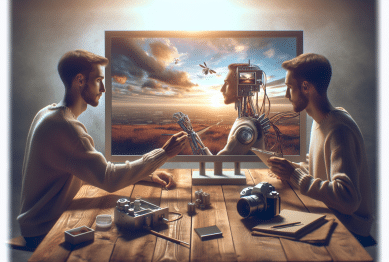

Looking ahead, AI’s influence on news and trends is set to deepen further. Automation will enable greater scalability, allowing media outlets to cover more stories and more regions and to reach more diverse audiences. Interactive formats, such as voice-activated news briefings and AI-powered summaries, will likely become mainstream. Yet authentic storytelling remains at the heart of journalism, and human creativity will guide the most meaningful narratives (see Knight Foundation).

Research is underway into developing AI systems that understand context, nuance, and cultural signals. These advanced models could support investigative journalism by parsing large databases for hidden patterns or anomalies missed by human analysis. The challenge will be to ensure these tools amplify transparency and uphold journalistic values—not merely optimize attention or revenue streams. Meanwhile, new roles emerge in journalism: data curators, AI ethicists, and algorithmic trainers who bridge technology and editorial missions.

Public engagement will remain central. Readers play an active part in shaping how artificial intelligence is adopted in newsrooms—through feedback, advocacy, and awareness. Ultimately, the dynamic between human-led reporting and machine-powered analysis is here to stay. News consumers can expect a richer, more relevant information diet, while media organizations continue to innovate responsibly in one of the fastest-moving fields today.

References

1. Simonite, T. (2022). How Artificial Intelligence Is Influencing Journalism. Nieman Lab. Retrieved from https://www.niemanlab.org/2022/08/ai-in-newsrooms/

2. Grinberg, E. (2023). AI-Generated News and Its Implications. Poynter. Retrieved from https://www.poynter.org/tech-tools/2023/how-ai-generated-news-is-changing-journalism/

3. Pew Research Center. (2020). News Personalization in a Digital World. Retrieved from https://www.pewresearch.org/journalism/2020/07/09/news-personalization/

4. West, D. (2023). Deepfakes and Synthetic Media: The Next Big Threat to News. Brookings Institution. Retrieved from https://www.brookings.edu/research/deepfakes-and-synthetic-media/

5. Anderson, M. (2020). Real-Time News Reporting in the Digital Age. Pew Research Center. Retrieved from https://www.journalism.org/2020/09/08/real-time-news-digital-age/

6. Bell, E. (2022). Rethinking AI in Journalism: Safety and Ethics. Tow Center for Digital Journalism. Retrieved from https://www.towcenter.org/research/rethinking-ai-journalism-safety-ethics/
