Curious about why AI-generated news seems to be everywhere? This guide explores recent trends in automated journalism, its impact on credibility, newsrooms, digital ethics, and what it might mean for the future of news reporting, all using real-world examples and authoritative sources.

AI-Generated News Is Becoming Impossible to Ignore

The increasing reach of AI-generated news isn’t subtle—it feels as if every scroll or click reveals another headline crafted by algorithms. What’s fueling this moment? Major newsrooms are adopting automated journalism tools to generate content for sports scores, financial updates, and even local crime reporting. This shift enables rapid delivery of information but raises vital questions about credibility, transparency, and oversight. AI-generated news stories can appear in feeds at record speeds, providing the latest updates almost instantly, but accuracy and quality must remain priorities.

One factor driving the adoption of AI in newsrooms is audience demand for up-to-the-minute information. As consumers crave continual updates, human writers alone struggle to keep pace. Natural language generation software takes structured data, such as sports statistics or stock prices, and formats it into readable narratives, saving journalists time. Large publishers are experimenting with these techniques to increase output and engagement without overwhelming staff, a sign of how strongly efficiency shapes the industry.

However, this rise brings challenges. Critics highlight how reliance on AI-generated news can amplify errors or biases embedded in the underlying data sets. The spread of algorithmic reporting prompts readers to wonder: ‘What stories are being missed or misreported?’ Investigating these concerns helps both publishers and their audiences stay vigilant as the field evolves, and it is feeding growing public interest in media literacy and the ethics of automated reporting.

Inside the World of Automated Journalism Tools

Automated journalism, sometimes called robot reporting, uses natural language generation (NLG) software to transform raw information into news stories. Large organizations such as The Associated Press and Reuters use these systems for routine data-driven coverage like earnings reports, and similar tools have been applied to earthquake alerts and election results. NLG tools can scan huge data sets and produce articles in minutes, giving digital platforms and apps a steady supply of fresh content. Newsroom managers appreciate how automation frees up reporters for analytical or in-depth stories.

NLG systems work from structured or semi-structured inputs rather than original reporting. For instance, AI models can track company press releases, convert numbers into plain English, and spot basic anomalies across financial filings. Journalists review or polish these reports before publishing, ensuring that automated content maintains editorial standards. Still, concerns about error rates and context loss in AI-written news persist, especially when subtle data interpretation is required.
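The data-to-text step described above can be sketched with a simple template. This is a minimal illustration, not any newsroom's actual system: the `EarningsRecord` fields, the `render_earnings_story` function, and the 50% review threshold are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class EarningsRecord:
    """Hypothetical structured input for one quarterly earnings report."""
    company: str
    quarter: str
    revenue_musd: float        # revenue in millions of US dollars
    prior_revenue_musd: float  # same quarter one year earlier (must be non-zero)


def percent_change(current: float, prior: float) -> float:
    """Year-over-year change as a percentage of the prior value."""
    if prior == 0:
        raise ValueError("prior value must be non-zero")
    return (current - prior) / prior * 100.0


def render_earnings_story(rec: EarningsRecord, review_threshold: float = 50.0):
    """Return (sentence, needs_review).

    needs_review flags unusually large swings so that an editor checks
    the figures before publication. The threshold is an arbitrary,
    illustrative value.
    """
    change = percent_change(rec.revenue_musd, rec.prior_revenue_musd)
    if change > 0:
        movement = f"up {change:.1f}% from"
    elif change < 0:
        movement = f"down {abs(change):.1f}% from"
    else:
        movement = "unchanged from"
    sentence = (
        f"{rec.company} reported {rec.quarter} revenue of "
        f"${rec.revenue_musd:,.1f} million, {movement} "
        f"${rec.prior_revenue_musd:,.1f} million a year earlier."
    )
    needs_review = abs(change) >= review_threshold
    return sentence, needs_review


if __name__ == "__main__":
    record = EarningsRecord("Example Corp", "third-quarter", 120.0, 100.0)
    text, flagged = render_earnings_story(record)
    print(text)
    print("Needs editor review:", flagged)
```

A fixed template like this is predictable and easy to audit, which is one reason it suits routine financial or sports updates. It also shows the limits the paragraph mentions: the code can flag a large swing, but it cannot explain why the swing happened.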

AI-generated news also affects digital publishing economics. Automated content keeps news sites updated with minimal costs, addressing the massive appetite for digital-first reporting. While robot reporting can boost audience engagement, it also leads to questions about authorship, intellectual property, and the shrinking pool of entry-level newsroom roles. The line between human and computer-written news keeps blurring, making transparency and ethical guidelines crucial for trust.

Impacts on Credibility, Misinformation, and Trust

Many readers are cautious about believing every AI-written update they encounter. Misinformation and fabrication are serious concerns. Several studies and journalism watchdogs have found that algorithmic stories can repeat errors or misunderstand context, unintentionally spreading misleading narratives. Some platforms label or watermark AI-generated stories to maintain trust and distinguish human-reviewed pieces from fully automated reports. Readers are learning to scrutinize bylines and platform disclosures as part of their news consumption routine.

Media experts stress the importance of editorial oversight in preventing or correcting potential errors. While automation accelerates news cycles, human editors play a critical role in reviewing sensitive or ambiguous topics. Errors in AI-generated news—especially about health, finance, or public safety—can have ripple effects. Newsrooms that clearly disclose their use of AI improve credibility and educate their audiences about how digital journalism operates behind the scenes.
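The combination of editorial oversight and disclosure can be expressed as a small publishing rule. The sketch below is an assumption-laden illustration rather than a description of any real newsroom workflow: the `Draft` type, the `SENSITIVE_TOPICS` set, and the label wording are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical set of topics that always go to a human editor first,
# taken from the examples named in the text above.
SENSITIVE_TOPICS = {"health", "finance", "public safety"}


@dataclass
class Draft:
    """Hypothetical record for a story waiting to be published."""
    headline: str
    topic: str
    machine_generated: bool
    human_reviewed: bool = False


def requires_human_review(draft: Draft) -> bool:
    """Machine-generated drafts on sensitive topics need an editor's sign-off."""
    return draft.machine_generated and draft.topic.lower() in SENSITIVE_TOPICS


def can_publish(draft: Draft) -> bool:
    """Block publication only when a required review has not happened yet."""
    return draft.human_reviewed or not requires_human_review(draft)


def disclosure_label(draft: Draft) -> str:
    """Reader-facing label separating automated from human-reviewed stories."""
    if not draft.machine_generated:
        return "Written by newsroom staff."
    if draft.human_reviewed:
        return "Generated with automation and reviewed by an editor."
    return "Generated automatically without editor review."


if __name__ == "__main__":
    draft = Draft("Flu cases rise in county", "Health", machine_generated=True)
    print(can_publish(draft), "|", disclosure_label(draft))
    draft.human_reviewed = True
    print(can_publish(draft), "|", disclosure_label(draft))
```

Keeping the review rule and the label in separate functions means a newsroom could tighten one without touching the other, for example by expanding the sensitive-topic list while leaving the disclosure wording unchanged.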

The rise of AI-generated news has also fueled misinformation campaigns and content farms aimed at gaming digital platforms for profit. Search algorithms occasionally amplify these misleading stories, causing significant confusion for readers. Initiatives like media literacy campaigns and fact-checking partnerships are now more important than ever. Trust in digital media depends on transparency, robust standards, and ongoing dialogue between publishers, technologists, and the public.

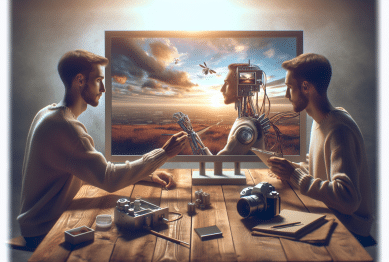

Shifting Roles in Newsrooms and the Journalist’s New Day

The integration of AI-generated news content is transforming how journalists work. Traditional reporting—where professionals investigate and craft every story—now sits alongside a new workflow where automation handles routine updates. In many organizations, staff journalists focus on deep analysis, interviews, or multimedia creation, while AI produces breaking news from structured feeds. This division empowers reporters to spend more time on stories that require context or investigative rigor.

Newsroom management faces unique questions about staff training and ethical responsibilities when embracing automated journalism. Editors must learn to identify algorithmic errors, spot data mishaps, and manage the relationship between AI-driven productivity and journalistic integrity. Some organizations have created new roles—such as ‘algorithmic editor’ or ‘content supervisor’—to navigate these evolving challenges. Adapting to AI-driven workflows remains a work in progress for the news ecosystem.

Journalists are also exploring how to collaborate with AI for original storytelling. Experimentation with tools that generate story prompts, suggest headlines, or highlight trends opens creative avenues. Far from replacing human writers, successful partnerships let each side contribute its strengths: speed, scale, and consistency from machines, paired with judgment, empathy, and narrative nuance from humans. The best outcomes emerge from informed teamwork and clear ground rules.

Ethical Dilemmas and Reader Awareness in Digital Reporting

The ethics of AI-generated news are hotly debated in the media world. Key questions revolve around accountability, bias in training data, and the transparency of algorithmic decisions. When an error slips into an automated story, who is responsible—the coder, the editor, or the publisher? Answers are evolving along with new industry guidelines. Ethical frameworks now call for clear labeling of AI content and stronger safeguards against manipulation or plagiarism.

Many media organizations provide public disclosures about the use of AI tools and automated content generation. These transparency efforts help foster trust and highlight accountability. Some newsrooms publish detailed policies on automated news workflows, allowing audiences to review ethical commitments and raise questions. These disclosures also mark a shift towards involving readers in oversight, promoting shared responsibility for maintaining accuracy.

In this rapidly changing landscape, media literacy has never been more essential. Readers need to know how to spot automation, assess information quality, and seek out corrections. Ongoing education initiatives from universities, journalism nonprofits, and digital watchdogs support this quest for awareness. Engaged audiences can help hold publishers to higher standards and champion responsible, credible innovation in digital news.

What the Future May Hold for AI and News Trends

Advances in AI-generated news seem likely to continue accelerating. As machine learning models become more sophisticated, automated reporting could move beyond routine stock and sports coverage into complex beats like politics, climate, or social justice. However, success depends on finding the right balance between speed, scale, and editorial integrity. Forward-thinking newsrooms now view AI as one tool in a larger kit, complementing rather than replacing human insight.

Digital technology is already changing how readers discover, evaluate, and share news. Search engines, social media, and aggregators all employ machine learning algorithms to filter and amplify specific types of content. Understanding these underlying systems can empower both journalists and audiences to spot patterns, identify gaps, and advocate for greater transparency and accountability in the media landscape. These broader trends will continue to shape public discourse and news consumption habits.

Ultimately, the future of AI-generated news will depend on open standards, collaborative governance, and ongoing public dialogue. News publishers, technologists, educators, and the wider public each have a stake in shaping what comes next. As the field evolves, curiosity and skepticism will remain vital for navigating information overload and setting ethical guardrails. Exploration and adaptation are likely to define the next chapter of journalism trends.

